About

Hi, nice to meet you! My name is Anita Hu. I am a second-year Mechatronics Engineering student at the University of Waterloo. I am currently a full-time student, the Perception Manager of the WATonomous student design team, and a part-time Undergraduate Research Assistant at CogDrive.

During my first internship in summer 2019, I worked as a computer vision developer at Miovision, where I dove into object detection neural networks and pattern recognition with k-means clustering and Gaussian mixture models. Through my involvement in WATonomous, a student design team at the forefront of designing and building autonomous vehicles, I have developed a deep interest in perception for autonomous vehicles and gained hands-on experience with ROS, computer vision, and deep learning.

I am always up for a challenge and looking forward to my next internship opportunity!

Skills

Languages

Frameworks

Tools

Experience

Computer Vision Developer

Miovision | May 2019 - Aug 2019

- Automated turning-movement-count template generation from vehicle tracks using k-means clustering, Gaussian mixture models, and least-squares optimization.

- Rearchitected layers of YOLOv3, as the first proof-of-concept for future TensorFlow models, to be compatible with Miovision's existing SSD framework.

- Delivered significant customer impact, reducing error by over 50% through continuous, iterative SSD model training.

- Reduced development iteration time through stochastic gradient descent with restarts that resulted in 100k fewer training iterations.

Perception Manager

WATonomous | May 2019 - Present

- Responsible for assignment and prioritization of OKRs, cross-team integration, and in-car operation of the software pipeline and sensors.

- Integrated YOLO Darknet models in ROS with OpenVINO.

- Trained semantic segmentation model for road and road line detection.

- Trained YOLOv3 model for pedestrian, cyclist and vehicle detection.

Object Classification Subteam Lead

WATonomous | Jan 2019 - Apr 2019

- Trained SSD MobileNet model in TensorFlow to detect 15 traffic sign classes with 0.95 mAP.

- Developed ROS nodes for traffic light, traffic sign and obstacle detection that subscribe to camera frames and publish detection messages.

- Integrated TensorFlow models in ROS with OpenVINO.

Perception Core Member

WATonomous | Sep 2018 - Dec 2018

- Generated synthetic scene images containing traffic lights using the Cut, Paste and Learn method.

- Trained TensorFlow object detection model to detect and classify different types of traffic lights.

- Used OpenCV to develop a traffic light state detection algorithm that is robust for all traffic light shapes.

Awards

Hack the North 2019 Finalist

Top 13 out of 1500 participants.

Awarded for AR Voice ML Ninjutsu Battle Simulator project at Hack the North 2019.

Voiceflow API Prize

API Prize out of 20+ teams.

Awarded for AR Voice ML Ninjutsu Battle Simulator project at Hack the North 2019.

Best Use of Learning in Hack

Category Prize out of 400 participants.

Awarded for VisionAI project at Hack the 6ix 2018.

Hack the North 2017 Finalist

Top 14 out of 1000 participants.

Awarded for BitToBin project at Hack the North 2017.

Projects

AR Voice ML Ninjutsu Battle Simulator

The battle simulator is a game where two players face off in a battle of Naruto ninjutsu hand signs. Each player has an anime figure. When a ninjutsu is cast, the effect is projected on the anime figure as a hologram. The machine learning model can identify 12 unique hand signs from the anime Naruto, as well as different anime cards. Each ninjutsu consists of multiple hand signs.

Python, Keras, OpenCV, Flask, Voiceflow API, React

Hand Gesture GUI Control

This program enables touchless control of the mouse and keyboard keys using hand gestures. Based on the number of fingers detected, the user can switch between two modes: controlling the mouse or the keyboard. Mouse controls include moving the cursor, clicking, scrolling, etc., allowing basic computer navigation. The keyboard controls include the arrow keys and space bar, allowing the user to play games with gestures.
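The mode switching described above could be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `Mode` enum, the `MODE_SWITCH` and `ACTIONS` tables, the specific finger counts, and the `step` function are all hypothetical, and finger detection itself (done with OpenCV in the project) is assumed to happen elsewhere.

```python
from enum import Enum


class Mode(Enum):
    MOUSE = "mouse"
    KEYBOARD = "keyboard"


# Hypothetical: finger counts that switch the active mode.
MODE_SWITCH = {4: Mode.MOUSE, 5: Mode.KEYBOARD}

# Hypothetical: finger counts mapped to an action within each mode.
ACTIONS = {
    Mode.MOUSE: {1: "move", 2: "click", 3: "scroll"},
    Mode.KEYBOARD: {1: "left", 2: "right", 3: "space"},
}


def step(mode, finger_count):
    """Return (new_mode, action) for the finger count seen in one frame.

    A mode-switch gesture changes the mode and triggers no action;
    an unrecognized count keeps the mode and returns None as the action.
    """
    if finger_count in MODE_SWITCH:
        return MODE_SWITCH[finger_count], None
    return mode, ACTIONS[mode].get(finger_count)
```

In a real loop, the returned action name would then be dispatched to a library such as PyAutoGUI to move the cursor or press a key.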

Python, OpenCV, PyAutoGUI

Claw Machine Bot

Claw Machine Bot was built with LEGO and programmed on a LEGO Mindstorms EV3. It has two game modes:

- Competitive mode: the user and robot compete in a total of 3 rounds. A random colour is chosen and the user will first attempt to grab an object of that colour. If the user fails, the robot will attempt to do so autonomously using EV3 sensors.

- Casual mode: the user controls the claw with the EV3 buttons to pick up an object, like a normal claw machine.

RobotC, LEGO Mindstorms EV3

Vision AI

Vision AI is a wearable for the visually impaired. Our solution consists of a camera connected to a Raspberry Pi that is attached to a backpack. A Google Home Mini, or any Android device with the Google Assistant, is used to issue commands for describing the surrounding environment. It has two modes:

- A brief description of the surrounding environment, giving the user a better sense of what is around them.

- The ability to read typed or handwritten text.

Python, OpenCV, Flask, TensorFlow, Google API

BitToBin

BitToBin is a low-cost automatic waste sorter designed to stop waste contamination in its tracks. Using machine learning and object recognition, BitToBin sorts each waste item placed on the opening platform and deposits it into the compost, recycling or garbage bin.

Python, Arduino, Clarifai, OpenCV

Face Recognition Door

Face Recognition Door is a door controlled with a Tkinter UI that unlocks using face recognition. The OpenCV LBPH face recognizer is trained on captured images of each new face and outputs a trained .yml file used for recognition. Names are stored in a SQLite database as key-value pairs (id and name). When a person is recognized at the door, the person's name is fetched from the database using the id of the matched face and displayed on the UI. The Arduino then receives a signal to rotate the servo and unlock the door.
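The id-to-name lookup described above might look something like the sketch below, using Python's built-in `sqlite3` module. The table name `people`, its columns, and the helper functions are assumptions made for illustration; the project's actual schema is not given here. The id is assumed to be the label returned by the face recognizer.

```python
import sqlite3


def create_db(conn):
    # Hypothetical schema: one row per known person, keyed by recognizer id.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS people (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )


def add_person(conn, person_id, name):
    # Parameterized query avoids SQL injection from user-entered names.
    conn.execute("INSERT INTO people (id, name) VALUES (?, ?)", (person_id, name))
    conn.commit()


def lookup_name(conn, person_id):
    """Return the stored name for a matched face id, or None if unknown."""
    row = conn.execute(
        "SELECT name FROM people WHERE id = ?", (person_id,)
    ).fetchone()
    return row[0] if row else None


# In-memory database for demonstration only; a real app would use a file.
conn = sqlite3.connect(":memory:")
create_db(conn)
add_person(conn, 1, "Anita")
```

Returning `None` for an unknown id lets the UI decide how to handle an unrecognized face, for example by keeping the door locked.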

OpenCV, Python, Arduino, Tkinter, SQLite

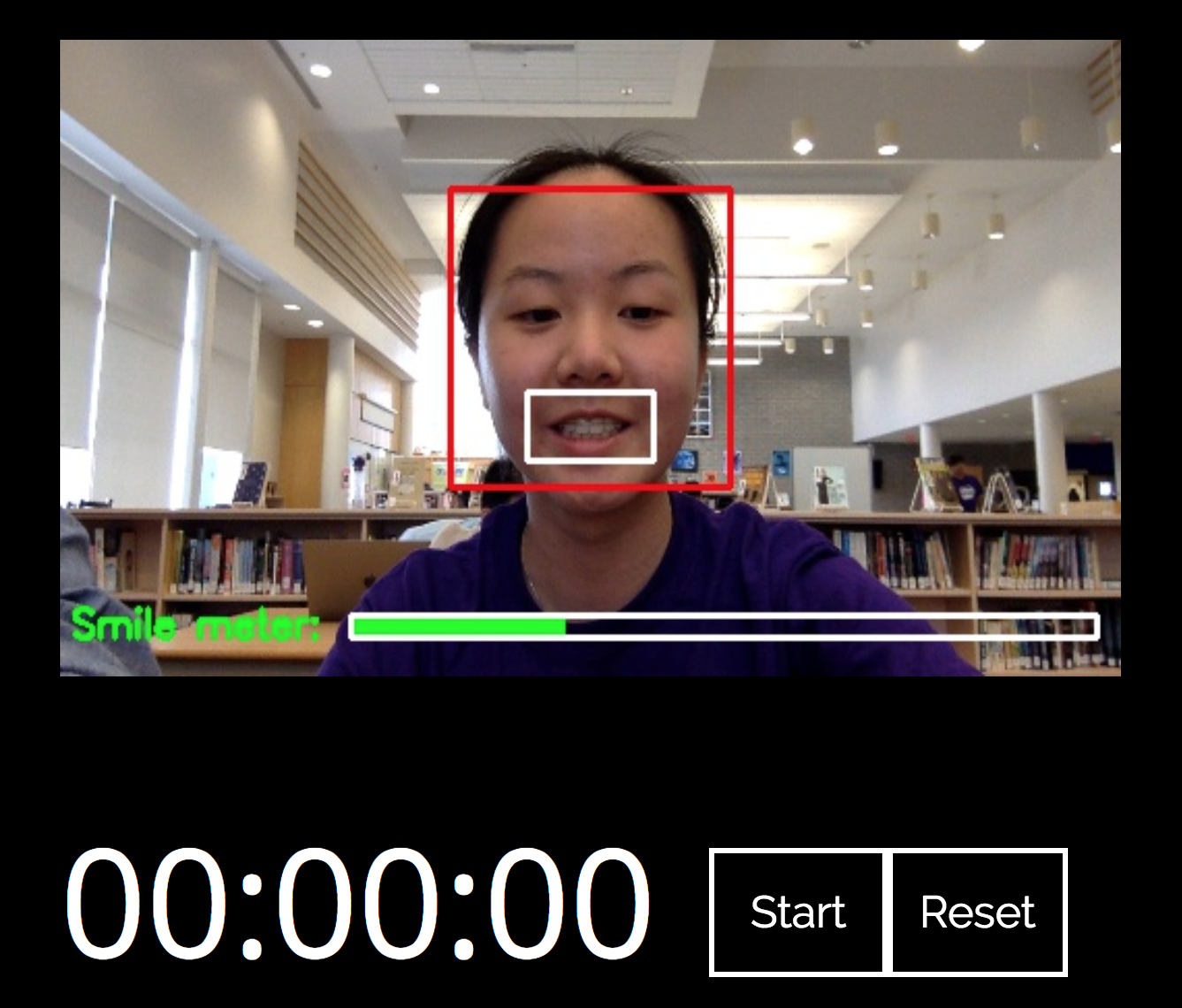

Presentation Buddy

Presentation Buddy is a website designed to give real-time feedback on the user's presentation. It contains a live video stream with a smile meter that fluctuates according to the user's facial expression, a timer, and presentation tips that refresh every 5 seconds.

Flask, OpenCV, Python, HTML, CSS, JavaScript

NewtSnap

NewtSnap is a mobile web app that provides a simple, efficient way to take notes. It takes in an image (from a file or the camera) through HTML forms and uses ocrad.js to output a string of text that can be edited or deleted. The notes are saved in local storage, so they remain there until you delete them or clear your browser data. The notes can be read out loud using responsivevoice.js, which lets users listen to their notes on the go and makes the notes more accessible to visually impaired users.

HTML, CSS, JavaScript, jQuery, ocrad.js, responsivevoice.js